Playtests generate a lot of data. From interviews alone, you may have hours of players' thoughts and opinions to wade through. This can feel overwhelming, and it’s easy to get bogged down. How do we make sense of that, and work out what really matters?

In the previous article in this series, we looked at how to make sure that players shared useful and relevant information with us. In this article we’ll look at the most efficient and effective ways to find the meaning hidden inside your interview data, and how to share reliable conclusions with your team.

Capturing what was said

While interviewing players, good moderation requires actively listening to the player, and responding to what they said in order to ask insightful follow-up questions. This means we probably didn’t take detailed notes during the interview itself.

We need to go back and take good notes - capturing the quotes that really represent the player’s meaning.

Before diving into your data, remind yourself of the objectives of the playtest - what did you want to learn? This will tune your brain into knowing what you’re looking for. Then we need to review what was said in the session.

One approach for taking notes, particularly if short on time, is to review the transcript of each session. PlaytestCloud automatically generates transcripts for each interview, which can be viewed in the video player or downloaded as a text file. You can then read through the transcript and copy and paste every relevant quote or point into an annotation or a separate document.

If you have the time, you might also want to watch the videos back and take notes from the recording of each session. This may help you recognise nonverbal cues and context, giving you a fuller understanding of what the participant meant than the transcript alone conveys.

If you are watching the video back, taking notes with PlaytestCloud’s annotation feature will make it easy to create video clips and highlight reels later. If you prefer an Excel-based analysis flow, you can also export all annotations as an Excel file, together with links to the related sections of the video.

Regardless of how you approach creating the notes, our goal is to create a list of relevant quotes or observations from each player. We can then explore this list of quotes to discover the themes.

Theming your data

We now need to find the patterns hidden within players' responses. This is much easier with whiteboarding or mind mapping software such as Miro. However, theming can also be done with real post-its, or using spreadsheet software (it just might be a bit slower!).

We first need to break the notes down into their smallest component parts. If you’re using whiteboarding software, copy and paste your list of notes into it.

Then review each note. If one post-it has multiple ideas on it, split them into multiple post-its.

For example, a player might have said “This game was too difficult because I didn’t know what the power-ups did, and I didn’t know where to go to end the level”. That sentence contains two issues (the power-ups, and the end of the level), and so needs to be split.

We’re looking to make sure that each post-it (or point on a mindmap) contains only a single idea.

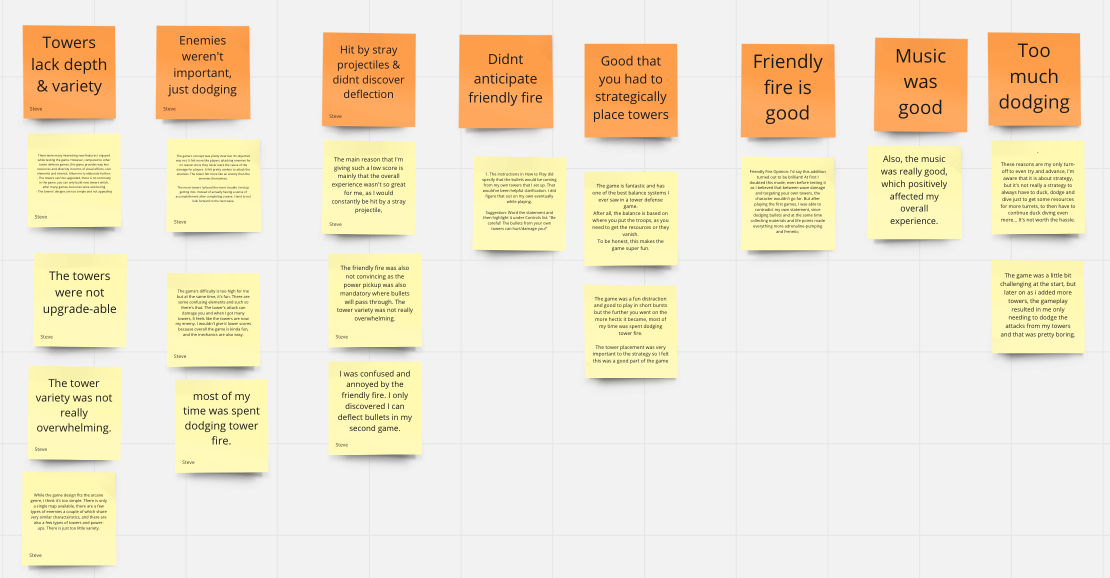

Once broken down, we need to re-group them by theme. Read each note and put it with notes from other players that make the same (or similar) points. Then finish by writing a label for each group. The label should interpret the points within it, and describe the pattern you’ve observed in that group.

This could look like this:

We now have a list of all of the themes that emerged from the interviews, each with the supporting quotes listed below it.
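If you keep your notes in a script or spreadsheet export rather than on a whiteboard, the grouping step can be sketched in a few lines of Python. This is only an illustration, not a PlaytestCloud feature: the `Note` type, the player IDs, the quotes and the theme labels are all hypothetical, and deciding which theme each note belongs to is still a judgment you make while reading.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    player: str  # hypothetical participant ID
    quote: str   # a single idea, already split out of longer comments
    theme: str   # label assigned by the researcher during theming


def group_by_theme(notes):
    """Return a dict mapping each theme label to the notes filed under it."""
    themes = defaultdict(list)
    for note in notes:
        themes[note.theme].append(note)
    return dict(themes)


# Hypothetical example notes, for illustration only.
notes = [
    Note("P1", "I didn't know what the power-ups did", "Power-ups are unexplained"),
    Note("P1", "I didn't know where to go to end the level", "Level exit is hard to find"),
    Note("P2", "I never picked up the power-ups", "Power-ups are unexplained"),
]

grouped = group_by_theme(notes)
for theme, members in grouped.items():
    print(theme)
    for note in members:
        print(f"  {note.player}: {note.quote}")
```

Keeping the player ID on every note means you can always trace a theme back to the people who raised it, which helps later when you look for video clips.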

Picking the top themes

Depending on how long your interviews are, there may be thirty or more themes that come up from the data. That’s too many to handle at once - if we took this back to the rest of the development team, they’d be overwhelmed. We need to decide which themes are most important to deal with first.

Decide which themes are most important based on your objectives - what did you hope to learn from the playtest? Review each objective in turn and decide which themes best answer it.

For example, if an objective was to discover ‘why did players think this game was hard’, I’d prioritize all of the themes that give an idea why players felt it was too hard.

Put all of those themes that answer an objective together with the objective on the mindmap.

Because interviews are ‘qualitative’ data (which goes deep into understanding experiences), we’re not so interested in filtering comments by ‘how many people said it’. Take a look at which comments came up frequently, because there are probably interesting things there, but don’t automatically disregard comments that only one or two players made.
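If you do want a quick view of how often each theme came up, a small sketch like the one below can help. It assumes hypothetical (player, theme) pairs taken from your themed notes, counts distinct players rather than raw mentions, and sorts the themes without dropping any of them, so themes raised by a single player stay visible.

```python
from collections import defaultdict


def players_per_theme(tagged_notes):
    """Count how many distinct players raised each theme."""
    players = defaultdict(set)
    for player, theme in tagged_notes:
        players[theme].add(player)
    # Sort by count, highest first, but keep every theme in the result.
    return sorted(
        ((theme, len(who)) for theme, who in players.items()),
        key=lambda item: item[1],
        reverse=True,
    )


# Hypothetical (player, theme) pairs, for illustration only.
tagged_notes = [
    ("P1", "Power-ups are unexplained"),
    ("P2", "Power-ups are unexplained"),
    ("P2", "Power-ups are unexplained"),  # same player repeating a point
    ("P3", "Level exit is hard to find"),
]

counts = players_per_theme(tagged_notes)
for theme, count in counts:
    print(f"{count} player(s): {theme}")
```

Treat the counts as a prompt for where to look, not as a ranking of importance.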

Once you’ve got answers for each of your research objectives, review the remaining themes and check whether players are telling you important things that fall outside your existing research objectives. If they are, there may be important issues with your game that aren’t yet on your radar, and that you’ll want to include in your conclusions.
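The same bookkeeping can be sketched in code if you are not working on a mindmap. The example below assumes a hypothetical mapping from each theme to the objective it answers (or `None` if it answers none), and separates the themes into those attached to an objective and those left over for a second look. The objective text and theme labels are made up for illustration.

```python
def assign_themes(objectives, theme_to_objective):
    """Split themes into those answering an objective and those left over."""
    by_objective = {objective: [] for objective in objectives}
    unassigned = []
    for theme, objective in theme_to_objective.items():
        if objective in by_objective:
            by_objective[objective].append(theme)
        else:
            # Themes outside every objective still deserve a review.
            unassigned.append(theme)
    return by_objective, unassigned


# Hypothetical objective and themes, for illustration only.
objectives = ["Why did players think this game was hard?"]

theme_to_objective = {
    "Power-ups are unexplained": "Why did players think this game was hard?",
    "Level exit is hard to find": "Why did players think this game was hard?",
    "Players want to replay levels with friends": None,
}

by_objective, unassigned = assign_themes(objectives, theme_to_objective)

for objective, themes in by_objective.items():
    print(objective)
    for theme in themes:
        print(f"  {theme}")
print("Not tied to an objective:", unassigned)
```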

Explaining the conclusions and convincing your team

At this point, you’ll have some themes that provide answers to your research objectives. Sometimes that’s enough - if you’re running these interviews for your own game, and are able to make design decisions by yourself, your themed notes will be enough to make changes to your game.

Much more commonly, though, games are made by teams, and the results from the interviews will need to be communicated to a wider group of people.

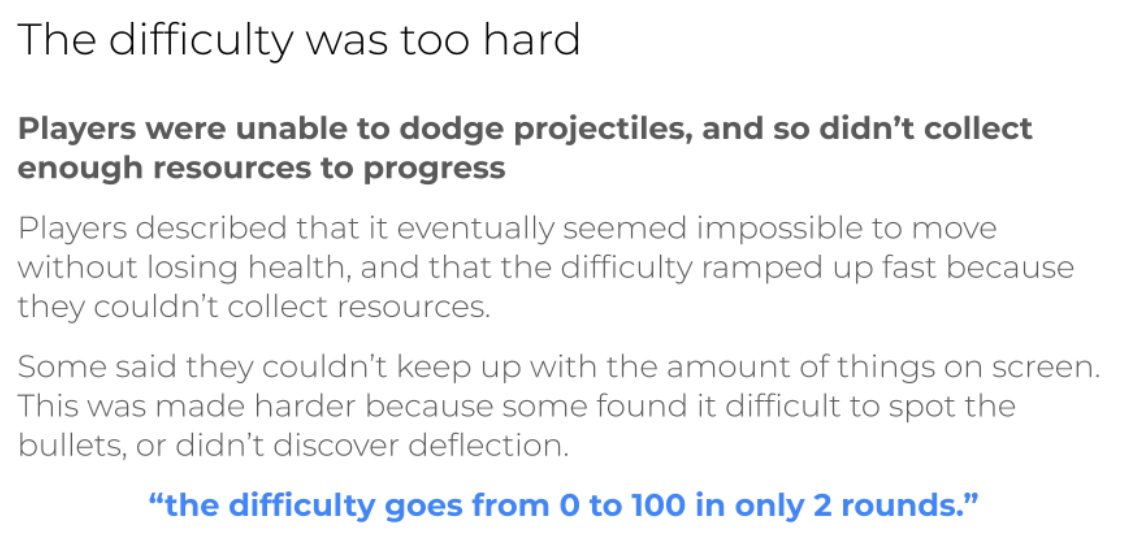

Making a report is one way of doing this. The report can include a section for each objective, with a slide for each of the themes within that objective.

On that slide, explain what the theme was, and why players were saying it. In this example the section is about difficulty, and this theme is about dodging projectiles.

Currently the slide is quite basic. We can make it a lot more convincing by adding video clips of players talking about the theme (which the video reels feature on PlaytestCloud will make easy to find and cut together). These can then be embedded into the report.

Using real footage of players describing their opinions and behavior creates deeper empathy and understanding than text alone, and so is highly recommended when sharing results with difficult team members (or funders!). It also reduces the risk of your report being challenged - it is objectively true that players said these things, and you have the videos to prove it.

Once you have a collection of compelling slides for each research objective, put them together in an order that makes sense, and encourages storytelling - convincing people of the need to act on your conclusions. More guidance on how to make a report is on the PlaytestCloud blog.

Interviews are one of the richest sources of information about player behavior and motivation, and are a crucial tool for making games that players love. They are useful from before the earliest prototype (to help understand your target players) through to post-launch (to identify and explain retention issues). I hope that with this series you feel prepared to apply them to your next game project.

January 31, 2023 at 6:00 AM